A faint blip on a night-sky photo can feel like a secret waiting to be named, and that’s where detection starts. You want to know which patterns matter and which are luck or error, so you set rules, thresholds, and tests. You’ll wrestle with missed signals, false alarms, and trade-offs between speed and certainty — and that choice shapes what counts as knowledge.

What Detection Is: Signal, Noise, and Definitions

Think of detection as the act of deciding whether something meaningful is present in the data you observe; it’s the boundary where signal meets noise. You’ll ask whether a pattern reflects a true phenomenon or random variation, and that question guides your methods.

Detection frames what counts as a signal, how you’ll treat ambiguous measurements, and which definitions make sense for your context. You’ll apply signal interpretation to translate raw readings into meaningful claims, noting assumptions and limits.

At the same time, you’ll pursue noise reduction to improve clarity without distorting the phenomenon you want to detect. That balance matters: overzealous filtering can erase subtle signals, while under-filtering leaves you overwhelmed by spurious fluctuations.

You’ll define detection operationally, with specific criteria and procedures, so decisions are reproducible. Ultimately, detection is a disciplined judgment: you choose definitions, manage noise, interpret remaining structure, and state how confident you are in calling something present.

Sensitivity, Specificity, and Decision Thresholds

When you set a decision threshold, you’re balancing two complementary risks: missing true signals (false negatives, which lower sensitivity) and flagging noise as signal (false positives, which lower specificity). You ask: how low can the threshold go before noise overwhelms true events?

Raising the threshold improves specificity but reduces sensitivity; lowering it does the opposite. You’ll usually choose a point based on consequences of false negatives versus false positives, not on an abstract optimum.
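
The idea of choosing a threshold from the consequences of each error type can be sketched in a few lines. This is a minimal illustration, not a standard procedure: the scores, labels, and cost values are made up, and `pick_threshold` is a hypothetical helper that assumes higher scores mean "more likely a real signal".

```python
def error_counts(scores, labels, threshold):
    """Count false negatives and false positives when score >= threshold is a detection."""
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return fn, fp


def pick_threshold(scores, labels, cost_fn, cost_fp):
    """Return the candidate threshold with the lowest total error cost."""
    best_threshold, best_cost = None, None
    for t in sorted(set(scores)):
        fn, fp = error_counts(scores, labels, t)
        cost = cost_fn * fn + cost_fp * fp
        if best_cost is None or cost < best_cost:
            best_threshold, best_cost = t, cost
    return best_threshold


# Made-up detector scores; label 1 = true signal, 0 = noise.
scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 0, 1, 1]

# Misses are ten times worse than false alarms: the threshold drops.
cautious = pick_threshold(scores, labels, cost_fn=10, cost_fp=1)   # -> 0.4
# False alarms are ten times worse than misses: the threshold rises.
strict = pick_threshold(scores, labels, cost_fn=1, cost_fp=10)     # -> 0.8
```

The same data yields two different thresholds because only the stated costs changed, which is the point: the choice follows from consequences rather than from the scores alone.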

To decide, quantify measurement accuracy and uncertainty. Use receiver operating characteristic curves or precision-recall analysis to visualize trade-offs and choose thresholds consistent with your objectives.
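
As a rough sketch of what an ROC analysis computes, the snippet below sweeps every threshold over a small, made-up set of scores and integrates the resulting curve. The function names are illustrative; in practice you would likely use an established library rather than this hand-rolled version.

```python
def roc_points(scores, labels):
    """Return (false_positive_rate, true_positive_rate) pairs, one per threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing is flagged
    # Lower the threshold step by step so the curve runs from (0, 0) to (1, 1).
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def area_under_curve(points):
    """Trapezoidal area under a curve given as ordered (x, y) points."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Made-up detector scores; label 1 = true signal, 0 = noise.
scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 0, 1, 1]

curve = roc_points(scores, labels)
auc = area_under_curve(curve)  # about 0.85 for this toy data
```

Each point on `curve` is one possible operating threshold, so picking a threshold amounts to picking a point whose mix of false alarms and detections fits your objectives.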

Consider the prevalence of true signals and the costs of errors. When true signals are rare, even a small false-positive rate can produce more false alarms than real detections, so a rare event often demands higher specificity unless missing it is critical. You should also test thresholds under realistic conditions, since lab measurement accuracy can overstate field performance.
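
The effect of prevalence can be made concrete with Bayes' rule. The sketch below computes the positive predictive value, the fraction of flagged events that are real, for illustrative numbers that are assumptions rather than measurements.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Fraction of positive calls that are true signals, by Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical detector: 99% sensitive, 99% specific, 1 true signal per 1000 cases.
ppv_rare = positive_predictive_value(0.99, 0.99, 0.001)    # roughly 0.09

# Same detector and prevalence, but specificity pushed to 99.99%.
ppv_strict = positive_predictive_value(0.99, 0.9999, 0.001)  # roughly 0.91
```

With these numbers, about nine in ten alarms are false at 99% specificity, and the ratio flips only once specificity is raised by two more nines.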

Finally, document why you picked a threshold and how changes in measurement accuracy or operating conditions would shift that choice, so detection remains defensible and adaptable.

Instruments and Data Pipelines for Extracting Signal

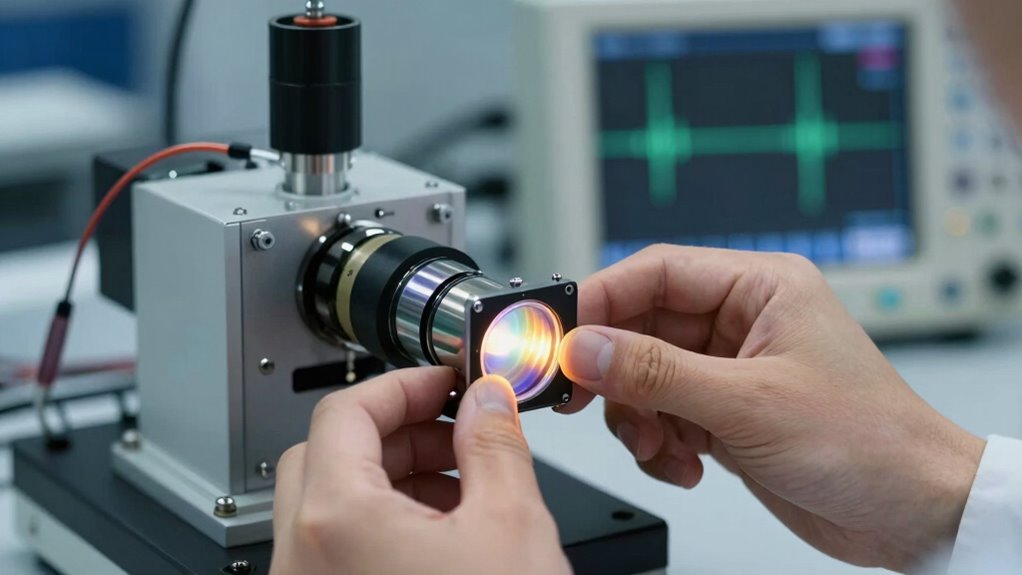

You’ll need rigorous instrument calibration protocols to guarantee measurements map reliably to real-world signals and to quantify uncertainty across operating conditions.

How will you design real-time data filtering that removes noise without erasing transient events of interest?

Balancing calibration, latency, and filter aggressiveness is central to extracting trustworthy signals.

Instrument Calibration Protocols

Because small biases in sensors or processing steps can masquerade as real signals, you need rigorous instrument calibration protocols to guarantee the integrity of data pipelines; these protocols define reference standards, correction models, and verification tests that convert raw detector output into reliable measurements.

You’ll establish calibration standards tied to traceable artifacts or simulated inputs, then document procedures that quantify instrument reliability over time and operating conditions.

You’ll derive correction models from controlled experiments, quantify uncertainty, and implement versioned metadata so every datum links to its calibration state.

Regular verification tests catch drift, while blind checks and cross-calibration with independent instruments expose systematic errors.
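
A minimal sketch of these pieces, assuming a simple linear correction model: fit gain and offset against reference values, tag each datum with the calibration version, and run a verification check for drift. The `Calibration` class, the version string, and all readings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Calibration:
    version: str   # identifier stored alongside every corrected datum
    gain: float
    offset: float

    def apply(self, raw):
        return self.gain * raw + self.offset


def fit_linear(raw, reference, version):
    """Least-squares fit of reference = gain * raw + offset."""
    n = len(raw)
    mean_x = sum(raw) / n
    mean_y = sum(reference) / n
    sxx = sum((x - mean_x) ** 2 for x in raw)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(raw, reference))
    gain = sxy / sxx
    return Calibration(version, gain, mean_y - gain * mean_x)


def has_drifted(cal, raw_check, reference_check, tolerance):
    """Verification test: does any corrected reading miss its reference by more than tolerance?"""
    worst = max(abs(cal.apply(x) - y) for x, y in zip(raw_check, reference_check))
    return worst > tolerance


# Controlled experiment: raw detector output against a reference standard (made-up values).
cal = fit_linear([10, 20, 30, 40], [21, 41, 61, 81], version="cal-v1")

# Every datum carries the calibration state it was produced under.
datum = {"value": cal.apply(25), "calibration_version": cal.version}

# Periodic verification against the same reference standard.
ok_now = has_drifted(cal, [10, 40], [21.0, 81.0], tolerance=0.5)      # False
drift_later = has_drifted(cal, [10, 40], [23.0, 84.0], tolerance=0.5)  # True
```

A real correction model may be nonlinear or depend on operating conditions; the linear form here is only the simplest case that shows how versioning and verification fit together.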

Real-Time Data Filtering

Although real-time data filtering must operate under tight latency and resource constraints, it’s where raw detector outputs are turned into actionable signals without letting noise, artifacts, or transient faults masquerade as events. You design pipelines that mix data preprocessing, adaptive thresholds, and real-time analytics to decide what’s worth storing, alerting on, or discarding. You’ll validate filters against edge cases, watching for bias that erases weak but true signals. You’ll balance compute, memory, and detection performance, and you’ll instrument telemetry to catch filter failures. Here’s a compact view of common pipeline stages and goals:

| Stage | Purpose |
|---|---|
| Ingest & preprocess | Clean, normalize, tag |
| Filtering & scoring | Suppress noise, rank events |
| Output & feedback | Persist signals, update models |
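
The three stages in the table can be sketched as a small streaming filter with an adaptive threshold. This is one possible design under stated assumptions, not a reference implementation: the baseline is a rolling median, spread is the median absolute deviation (MAD), and the class name, window size, and `k` value are all hypothetical.

```python
from collections import deque
from statistics import median


class AdaptiveFilter:
    """Flag readings that sit far from a rolling baseline (median and MAD)."""

    def __init__(self, window=20, k=5.0, min_history=5):
        self.history = deque(maxlen=window)  # recent unflagged readings
        self.k = k                           # filter aggressiveness (assumed value)
        self.min_history = min_history       # readings needed before scoring starts

    def process(self, reading):
        # Ingest & preprocess: drop missing samples, normalize to float.
        if reading is None:
            return None
        value = float(reading)

        # Filtering & scoring: distance from the baseline in units of MAD.
        event = None
        if len(self.history) >= self.min_history:
            baseline = median(self.history)
            mad = median([abs(v - baseline) for v in self.history])
            score = abs(value - baseline) / max(mad, 1e-9)
            if score > self.k:
                event = {"value": value, "baseline": baseline, "score": score}

        # Output & feedback: only unflagged readings update the baseline,
        # so a transient event does not inflate the threshold that follows it.
        if event is None:
            self.history.append(value)
        return event


# Made-up stream with one dropped sample and one transient spike.
stream = [10.0, 10.2, 9.9, 10.1, 10.0, None, 9.8, 10.1, 25.0, 10.0, 10.2]
detector = AdaptiveFilter()
events = [e for e in map(detector.process, stream) if e is not None]
flagged_values = [e["value"] for e in events]  # only the spike at 25.0
```

Excluding flagged readings from the baseline is a trade-off: it protects the threshold from transients, but a genuine, lasting level shift would keep being flagged until something resets the history. Which behavior you want depends on the events of interest.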

Error Rates and Statistical Confidence

When you evaluate detection systems, you need to balance how often they miss real signals versus how often they cry wolf — false negatives and false positives — because those rates determine how much trust you can place in any reported result.

You’ll examine error types explicitly: type I and type II errors map to false positives and false negatives, and you’ll quantify trade-offs with metrics like precision, recall, and receiver operating characteristic curves.

You’ll also use confidence intervals to express uncertainty around estimates, so a reported detection rate isn’t taken as absolute. Ask how wide an interval is and whether it overlaps operationally important thresholds.
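
One common way to put an interval around a detection rate is the Wilson score interval for a binomial proportion. The sketch below uses made-up counts and a hypothetical operational requirement of 85% to show the overlap check described above.

```python
from math import sqrt


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a proportion; z=1.96 gives roughly 95% coverage."""
    p = successes / trials
    denom = 1 + z ** 2 / trials
    center = (p + z ** 2 / (2 * trials)) / denom
    half = z * sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return center - half, center + half


# Hypothetical validation run: 45 of 50 known signals were detected.
low, high = wilson_interval(45, 50)   # roughly (0.79, 0.96)

required_rate = 0.85  # assumed operational requirement
meets_requirement = low >= required_rate  # False: the interval still includes 0.85
```

The point estimate of 90% looks comfortably above the requirement, yet the interval shows that 50 trials cannot rule out a true rate below 85%.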

Statistical power matters too: low power inflates false negatives, while multiple comparisons inflate false positives unless corrected. You should question assumptions behind p-values and model fit, and prefer transparent reporting of both point estimates and their intervals.
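
To illustrate a multiple-comparisons correction, here is a small sketch of the Benjamini-Hochberg procedure, which controls the false discovery rate across many candidate detections. It is one of several possible corrections, chosen here for illustration, and the p-values are made up.

```python
def benjamini_hochberg(p_values, fdr=0.05):
    """Return a list of booleans: True where a candidate survives the correction."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])

    # Find the largest rank k whose sorted p-value satisfies p_(k) <= (k / m) * fdr.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * fdr:
            cutoff_rank = rank

    # Every candidate ranked at or below the cutoff is kept.
    keep = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            keep[idx] = True
    return keep


# Made-up p-values for ten candidate detections.
p_values = [0.041, 0.001, 0.205, 0.008, 0.039, 0.06, 0.042, 0.212, 0.074, 0.216]

naive_hits = sum(p < 0.05 for p in p_values)   # 5 candidates pass an uncorrected test
corrected = benjamini_hochberg(p_values)
corrected_hits = sum(corrected)                # 2 candidates survive the correction
```

Three of the five uncorrected "detections" disappear once the number of comparisons is taken into account, which is the inflation of false positives the paragraph above warns about.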

That way you’ll interpret detections with calibrated skepticism and make decisions grounded in quantified uncertainty.

Validating Detectors: Benchmarks, Datasets, and Protocols

You should start by asking which benchmark datasets truly reflect the conditions your detector will face and whether their composition is balanced and representative.

You’ll need to agree on standardized evaluation metrics so comparisons are meaningful and not misleading.

Finally, you should adopt reproducible testing protocols that specify data splits, preprocessing, and statistical reporting to guarantee results can be independently verified.

Benchmark Dataset Selection

Choosing benchmark datasets for validating detectors is one of the most consequential decisions you’ll make in an evaluation pipeline: it shapes what performance means, what failure modes get exposed, and whether results generalize beyond the lab.

You can’t treat benchmark diversity as optional; you must seek dataset representativeness across conditions, populations, sensors, and tasks. Ask what phenomena are missing, which edge cases break models, and whether data collection biases mimic real deployment.

Be deliberate about splits, metadata, and provenance so you can interpret results honestly. Consider emotional stakes as much as technical ones:

- Frustration when rare failures aren’t represented.

- Relief when diverse cases validate robustness.

- Anxiety over hidden collection biases.

- Confidence when datasets reflect reality.

Make selection a transparent, documented act.

Evaluation Metric Standardization

Because metrics define what “good” looks like, standardizing evaluation measures is a critical step in validating detectors: you’ll want metrics that are interpretable, comparable across studies, and aligned with real-world costs.

You should ask which evaluation criteria reflect operational priorities—precision, recall, false-alarm cost, detection latency—and adopt standard metrics that map to those priorities.

Think about units, thresholds, and aggregation methods so reported numbers mean the same thing everywhere. You’ll prefer metrics that resist gaming, reveal trade-offs, and support statistical comparison.

When proposing new measures, justify them against established metrics and show when they change conclusions.

Reproducible Testing Protocols

While standard metrics tell you what to measure, reproducible testing protocols define how measurements are made so results can be trusted and compared across studies.

You’ll wonder whether datasets, benchmarks, and procedures are rigorous enough to reveal real capability or just artifacts. Reproducibility standards matter: specify data provenance, preprocessing, random seeds, and versioned code.

Testing environments must be described and shared so others can recreate conditions. Ask whether benchmarks reflect realistic variability or narrow scenarios. Clear protocols let you diagnose failures, build confidence, and iterate.

- Document every step to inspire trust.

- Share environments to reduce guesswork.

- Use diverse benchmarks to provoke humility.

- Publish negative results to cultivate honesty.
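
Some of these protocol elements are easy to make mechanical. The sketch below shows a seeded train/test split and a fingerprint of the protocol settings that can be published alongside results. The field names in `protocol` are hypothetical, and the "..." placeholder stands for whatever code version you actually used.

```python
import hashlib
import json
import random


def split_dataset(item_ids, seed, test_fraction=0.2):
    """Deterministic train/test split: same ids and seed give the same split."""
    rng = random.Random(seed)      # local generator, independent of global state
    shuffled = sorted(item_ids)    # sort first so the input order does not matter
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def protocol_fingerprint(protocol):
    """Hash a canonical JSON form of the protocol so others can confirm they match it."""
    canonical = json.dumps(protocol, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical protocol record; every field name here is illustrative.
protocol = {
    "seed": 1234,
    "test_fraction": 0.2,
    "preprocessing": ["normalize", "deduplicate"],
    "code_version": "...",
}

item_ids = [f"sample-{i:03d}" for i in range(10)]
train, test = split_dataset(item_ids, protocol["seed"], protocol["test_fraction"])
fingerprint = protocol_fingerprint(protocol)
```

A fixed seed reproduces the split only when the interpreter and library versions match as well, so the environment belongs in the protocol record along with the seed.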

Detection in Physics, Medicine, Cybersecurity, and Vision

Detection spans everything from subatomic particles to network traffic and medical scans, and you’ll see how the same core ideas (signal extraction, noise suppression, thresholding, and pattern recognition) get adapted across physics, medicine, cybersecurity, and computer vision. You’ll compare methods, applications, challenges, and advances across these domains while keeping the focus on practical outcomes.

| Domain | Typical signal | Key toolset |
|---|---|---|
| Physics | Particle hits, spectral lines | Filters, statistical tests |
| Medicine | Imaging anomalies, biomarkers | Tomography, ML classifiers |
| Cybersecurity | Anomalous flows, signatures | IDS, anomaly detection |
| Vision | Objects, scenes | CNNs, segmentation |

You’ll notice recurring patterns: calibration and validation matter everywhere, the balance between labeled data and unsupervised approaches differs by domain, and false positives and false negatives drive design choices. Practical detection links measurement quality to algorithmic choices; improvements often come from better sensors, richer labels, and cross-domain methods. Your role is to evaluate trade-offs and adopt methods that suit each domain’s constraints and goals.

Trade-Offs: Speed, Cost, Interpretability, and Fairness

When you weigh speed, cost, interpretability, and fairness in detection systems, you’re juggling trade-offs that shape design choices and real-world impact.

You’ll run a trade-off analysis against performance metrics: faster models may cut latency but raise costs and obscure interpretability.

You’ll ask how speed considerations affect accuracy and whether cheaper sensors introduce bias, because fairness concerns aren’t optional.

Interpretability challenges force you to choose simpler, slower approaches or accept opaque, high-performing ones and then mitigate downstream harms.

- A rapid alert that’s biased feels like betrayal.

- A cheap solution that misses edge cases feels reckless.

- An interpretable model that’s slow feels frustrating.

- A high-performing opaque system that harms groups feels unacceptable.

You’ll measure decisions with performance metrics, probe costs, confront the limits of interpretability, and surface fairness concerns, balancing quantifiable outputs with ethical judgment so detection actually serves people.

Emerging Challenges: Adversaries, Scale, and the Unexpected

Because attackers adapt and systems grow, you’ll face new kinds of threats that aren’t covered by traditional evaluation—adversaries will probe, spoof, and exploit corner cases while scaling increases both false positives and operational strain.

You’ll confront adversarial attacks that expose the limits of detectors designed before attackers refined their techniques. As data volumes grow and rare patterns multiply, algorithmic bias can be amplified, producing unexpected outcomes that misdirect responses or marginalize groups.

You’ll need to reassess metrics, stress-test on adversarial distributions, and simulate emerging technologies interacting in novel ways. You’ll also weigh ethical implications: who bears harm when detectors fail, and how transparency or secrecy changes risk?

You’ll ask whether current pipelines can adapt without prohibitive cost, and whether human oversight remains feasible at scale. You’ll prioritize modular defenses, continuous monitoring, and incident forensics to detect subtle shifts.

Frequently Asked Questions

How Does Detection Relate to Causation Versus Correlation?

Detection surfaces signals, but a detected pattern is only a correlation until you test it, so you shouldn’t assume causation. Use correlation to spot candidate patterns, then design experiments or causal analyses that test mechanisms and distinguish real causes from mere associations.

Can Detection Systems Be Patented or Are They Open Scientific Methods?

Yes, detection systems can be patented if they meet the applicable patent criteria. You’ll weigh patentability against prior art and against whether the approach is already an open scientific method, especially when the claims cover proprietary algorithms that must be described precisely enough to establish novelty.

What Ethical Frameworks Guide Deployment Beyond Fairness Concerns?

You should consider principles such as autonomy, privacy, accountability, and proportionality. Deployment strategies must balance risk assessment, stakeholder engagement, transparency, and oversight, so that you question impacts, enable redress, and adapt to evolving social and legal norms.

How Do Cultural Biases Influence What Counts as a Detectable Signal?

Like a lens that tints light, you’ll find cultural perception shapes signal interpretation: societal context decides which patterns matter, so bias awareness is crucial — you’ll question assumptions, redesign sensors, and recalibrate meanings accordingly.

Can Detection Failures Lead to Legal Liability for Developers?

Yes, you can face liability if your system fell short of accepted detection standards and its failures caused harm. To limit legal exposure, you’ll need to show that the system complied with accepted practices and that you documented risks and applied reasonable mitigation.